Articles

-

Meeting Maria Ressa (Again)

February 17, 2025

I first met her before she was imprisoned.

I was 17. She was giving a talk at a local university about social media and disinformation. Before the 2016 US elections, she had sounded the alarm on disinformation spreading in the Philippines, which influenced our elections and propelled Duterte to power. He went on to lead yet another failed war on drugs and a successful war on the poor.

-

Aligning Models w/o Alignment: How Reliable ML can help in AGI Safety

September 26, 2021

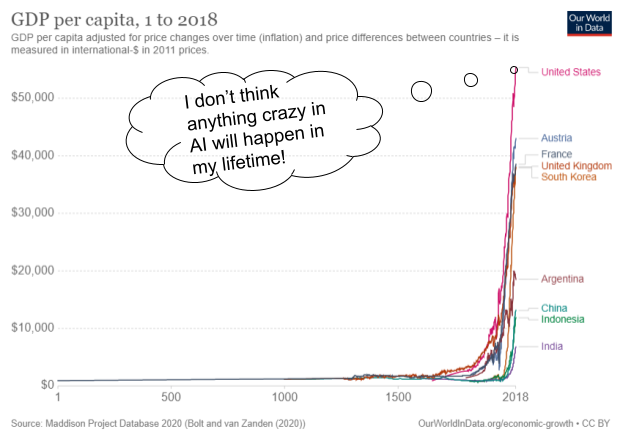

It is very easy to draw the wrong conclusions if one does not look at the bigger picture. Progress in AI will explode in the coming decades as more effort and resources are poured into its advancement, within the same capitalist system that allowed GDP per capita to rise over the past century at a rate never before seen in human history. With an increase in AI capabilities comes an increase in its dangers. Artificial General Intelligence (AGI) Safety considers the long-term impacts (decades to centuries) of AI models capable of generalizing to domains not specified in training. However, AGI Safety (safety) is still a niche topic within current machine learning research. When it is treated seriously as a research area, the work is often limited to extremely well-funded organizations based in Western countries (e.g. OpenAI in the US and DeepMind in the UK). How can I, an undergrad based in Asia without any connections to any of these organizations, possibly do technical work in AI Safety?

-

How I got a Free Dinner with Doctors from all across Asia

April 14, 2020

I got off the bus and walked for half a kilometer. ‘405 Havelock, Furama Hotel. Turn on the second right’ I kept muttering to myself so I didn’t have to check Google Maps again. As I neared my destination, I glanced up at the tops of the buildings. I saw the bright golden words ‘FURAMA RIVERFRONT’, which made me break into a brisk walk. I was, after all, late.